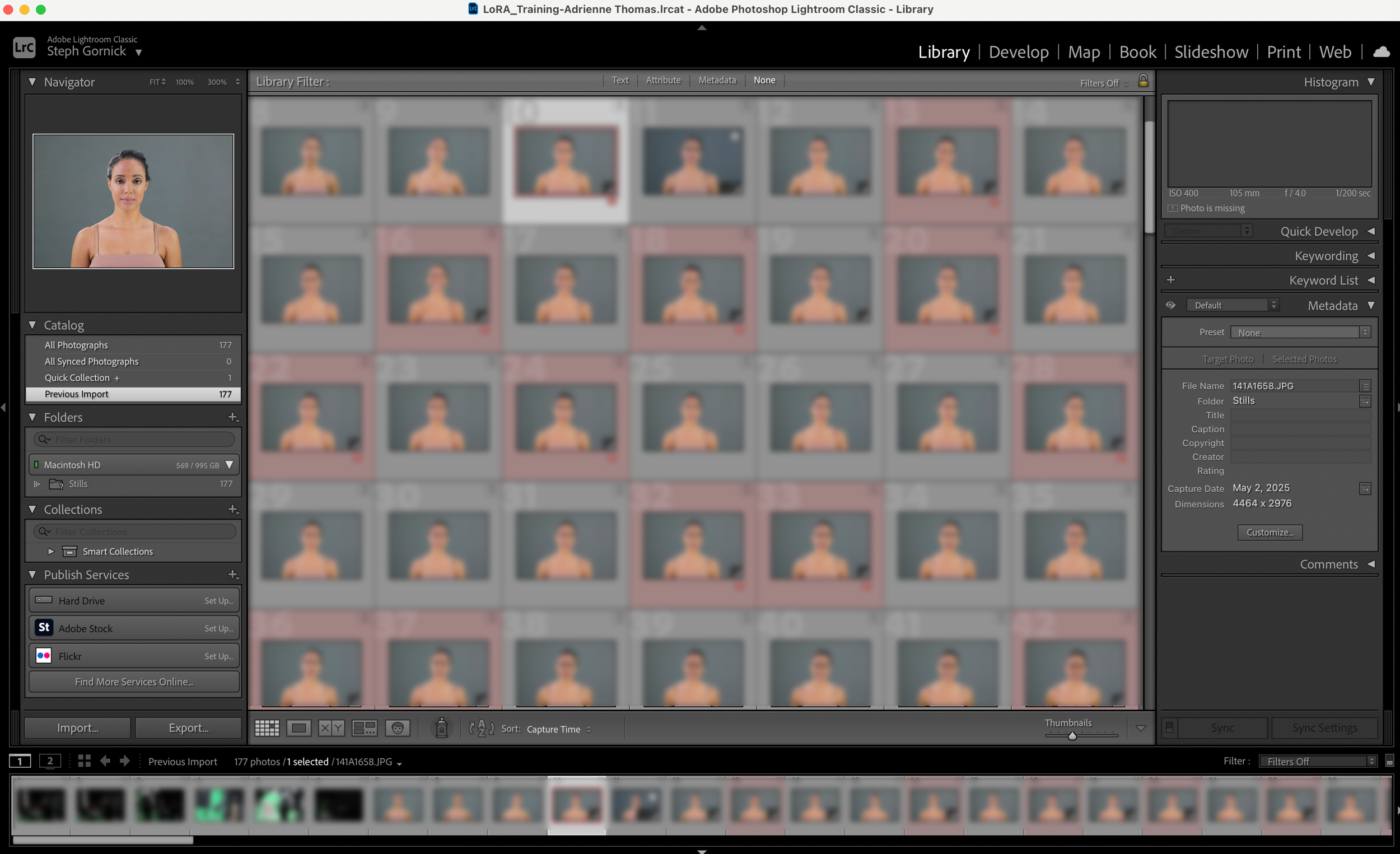

Behind the Scenes of My LoRA Training Workflow: A Balance of Automation and Curation

When people think of training LoRA (Low-Rank Adaptation) models, they often imagine it’s as simple as throwing a bunch of images into a machine and letting the magic happen. The reality? It’s far more hands-on — and often more human — than most expect.

After the initial image production and curation phase, the real work begins:

Data cleaning, correction, and captioning.

This stage is meticulous, repetitive, and highly manual. Every image needs to be vetted for quality, consistency, and relevance. Problems like inconsistent labeling, poorly cropped visuals, and irrelevant frames can undermine the final model.

To streamline this process, I built a privately trained GPT model, a tailored assistant that helps speed up and structure captioning and dataset labeling. But even with this tool in place, the work doesn't become hands-off. In fact, it requires a new kind of attention: steering the AI so its captions match the visual content and use the precise language that LoRA training requires.

This means:

Redirecting the model when it overgeneralizes or misses subtle visual cues.

Ensuring consistency in naming conventions, poses, character identities, and descriptive detail.

Watching for bias — because even with AI assistance, it’s easy to perpetuate overused or inaccurate descriptors.

Manually verifying metadata to make sure each caption reflects what’s actually in the frame, not what the AI assumes is there.
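Some of these consistency checks can be partly automated. The following is a hypothetical Python sketch, not the tooling behind my own workflow. It assumes each image has a matching `.txt` caption made of comma-separated tags (a common convention, though your trainer may differ), a trigger word such as the placeholder `mychar` that should lead every caption, and a watchlist of descriptors you suspect are overused. It flags empty captions, a missing or misplaced trigger word, repeated tags, and watchlist terms that appear in too large a share of captions. It cannot tell whether a caption matches what is actually in the frame; that check stays manual.

```python
from collections import Counter
from pathlib import Path


def load_captions(folder: str) -> dict[str, str]:
    """Read every .txt caption in `folder`, keyed by file stem.

    Assumption: one caption file per image (e.g. img_001.png + img_001.txt).
    """
    return {
        p.stem: p.read_text(encoding="utf-8").strip()
        for p in sorted(Path(folder).glob("*.txt"))
    }


def lint_captions(
    captions: dict[str, str],
    trigger: str,
    watchlist: list[str],
    max_share: float = 0.5,
) -> list[str]:
    """Return human-readable warnings about caption consistency.

    Assumption: captions are comma-separated tags with the trigger word first.
    """
    issues: list[str] = []
    tag_counts: Counter = Counter()  # number of captions containing each tag

    for name, caption in captions.items():
        tags = [t.strip().lower() for t in caption.split(",") if t.strip()]
        if not tags:
            issues.append(f"{name}: empty caption")
            continue
        if tags[0] != trigger.lower():
            issues.append(f"{name}: trigger word '{trigger}' is not the first tag")
        repeated = sorted(t for t, n in Counter(tags).items() if n > 1)
        if repeated:
            issues.append(f"{name}: repeated tags {repeated}")
        tag_counts.update(set(tags))  # count each tag once per caption

    # Flag suspected filler descriptors that show up in too many captions.
    total = len(captions)
    for word in watchlist:
        share = tag_counts[word.lower()] / total if total else 0.0
        if share > max_share:
            issues.append(f"'{word}' appears in {share:.0%} of captions")
    return issues
```

A call such as `lint_captions(load_captions("dataset/"), "mychar", ["beautiful", "stunning"])` returns a list of warnings to review by hand; the folder path, trigger word, and watchlist are placeholders, and the 50% threshold is an arbitrary starting point rather than a recommended value.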

Training a good LoRA model isn’t just about feeding in data. It’s about building a clean, descriptive, and consistent foundation that the model can learn from. The AI helps — immensely — but it’s still a tool that needs active creative direction.

In many ways, this process feels like editing film footage or building a motion graphics sequence: you start with raw assets, but the power lies in the refinement, the pacing, the frame-by-frame decisions. The AI might be a collaborator, but you’re still the director.

If you’re exploring LoRA training and feel frustrated by how much effort goes into prep, know that it’s not a flaw in your process. It is the process. And while it’s tempting to rush to the generation phase, your future outputs will only be as good as the intention you put into the dataset.